SQL Agent 🤖 - Building Your Personal AI Database Assistant

Table of Contents

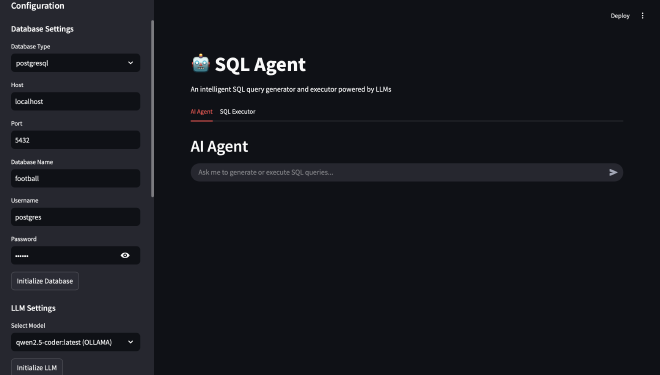

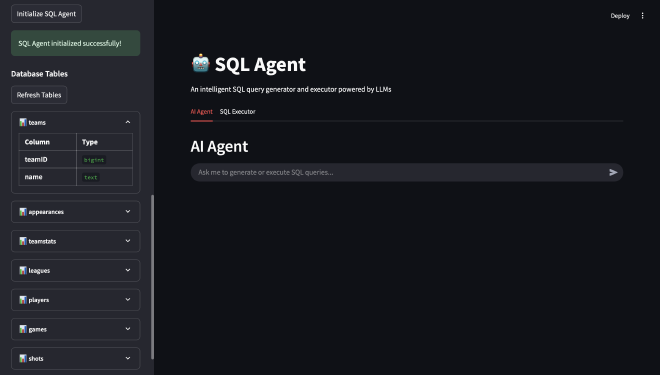

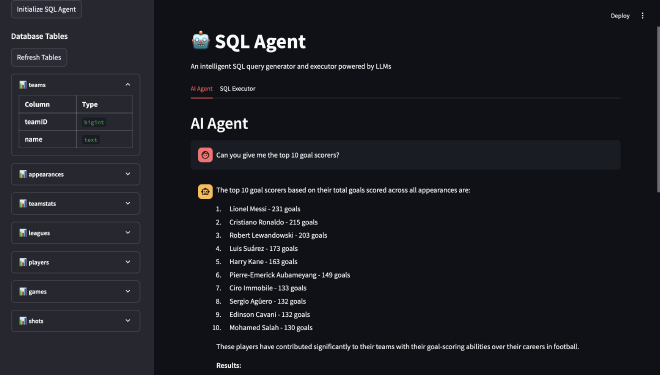

Databases are the backbone of modern applications, yet interacting with them often requires specialized knowledge of SQL. What if you could simply ask questions in plain English and get the data you need? That’s the promise of the SQL Agent I’ve built—an intelligent assistant that converts natural language queries into SQL, executes them against your database, and presents the results in an intuitive interface. Beyond just query conversion, this tool provides detailed error feedback, data visualization, and a direct SQL execution mode for power users. By combining the language understanding capabilities of Large Language Models with database connectivity, this project makes database interaction accessible to everyone, from business analysts without SQL knowledge to experienced developers looking to streamline their workflow.

This blog details how I built this project, walking through the architecture, key components, and implementation details with code examples. Let’s dive into how you can create your own SQL Agent to revolutionize your database workflows!

Demo #

Introduction #

The Database Access Challenge #

Despite the ubiquity of databases in every organization, meaningful access to data remains limited to those with technical skills. This creates several challenges:

- Knowledge Gap: Business users often depend on technical teams for data extraction, creating bottlenecks and delays.

- Query Complexity: Even for those familiar with SQL, crafting complex queries can be time-consuming and error-prone.

- Debugging Difficulty: When queries fail, pinpointing the exact cause can be challenging without proper error handling.

- Results Interpretation: Raw data needs proper visualization to derive meaningful insights.

The SQL Agent addresses these challenges by providing a natural language interface to databases, comprehensive error handling, and built-in visualization capabilities—effectively democratizing database access across technical and non-technical users alike.

Technical Foundation #

The SQL Agent builds on several powerful technologies to deliver its functionality:

-

LangChain: This framework orchestrates the interactions between the user, the language model, and the database. It provides the tools to build an agent that can make decisions about how to handle queries, execute SQL, and process results. LangChain’s agent framework is particularly valuable for creating a system that can reason about errors and adjust its approach accordingly.

-

LLM Integration: The SQL Agent supports both OpenAI models (like GPT-4) and local models through Ollama. This flexibility allows users to choose between cloud-based power and local privacy according to their needs. The LLMs provide the critical natural language understanding capability, translating user questions into SQL queries and explaining results in plain language.

-

SQLAlchemy: This powerful SQL toolkit and Object-Relational Mapping (ORM) library provides a flexible and secure way to connect to different database systems. It handles the execution of SQL queries and returns results in a structured format that can be easily processed.

-

Streamlit: The front-end interface is built with Streamlit, which allows for rapid development of interactive web applications. Streamlit’s components make it easy to display query results, visualizations, and provide user controls without complex web development.

Key Features #

Natural Language to SQL Translation #

The heart of the SQL Agent is its ability to transform natural language questions into valid SQL queries. This is accomplished through a carefully designed agent architecture using LangChain:

def initialize_agent(self, db_manager) -> bool:

"""Initialize the agent with SQL tools and chains."""

try:

if not self.llm:

st.error("Please initialize the LLM first")

return False

self.db_manager = db_manager

# Define tools

tools = [

Tool(

name="execute_sql_query",

func=self.execute_sql_tool,

description="""Useful for when you need to execute a SQL query.

Input should be a raw SQL query WITHOUT any surrounding quotes or function call syntax.

Example: SELECT * FROM table_name

NOT: ```sql SELECT * FROM table_name``` or "SELECT * FROM table_name"

"""

),

Tool(

name="get_schema",

func=self.get_schema_tool,

description="Useful for when you need to understand the database schema. Returns tables and their columns. No input is required for this tool."

)

]

# Define prompt template for the agent

prompt_template = """You are a SQL expert that helps users query databases.

When asked a question, think through what tables and columns you need to query, then use the provided tools to execute SQL queries and return the results.

Always explain your reasoning in a clear, step-by-step manner. First understand what the user is asking for, then decide what SQL query would get that information, then execute it.

{schema_context}

IMPORTANT SQL QUERY GUIDELINES:

1. ALWAYS quote column and table names with double quotes (e.g., "teamID" not teamID)

2. For string matching, put string literals in single quotes: WHERE name = 'example'

3. For aggregate functions like COUNT, AVG, SUM, etc., make sure to use an alias for readability

For your final answer, provide:

1. A natural language explanation of the results

2. DO NOT include the SQL query in your answer - it will be displayed separately in the UI

Tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action (must be a string)

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: your final answer with explanation ONLY - do not include the SQL query

IMPORTANT: When using tools, you must provide ONLY the tool name without any parentheses or function call syntax. For example, use "get_schema" not "get_schema()".

Begin!

Question: {input}

{agent_scratchpad}"""

This approach uses a ReAct (Reasoning + Acting) pattern where the agent thinks through the problem, decides what actions to take, and executes them. The agent has access to two primary tools:

- A tool to execute SQL queries

- A tool to retrieve the database schema

When a user asks a question like “How many customers made purchases last month?”, the agent:

- Reasons about the tables and relationships needed

- Fetches the schema to understand the database structure

- Formulates a SQL query that answers the question

- Executes the query and presents the results in natural language

Advanced Error Handling and Debugging #

What sets this SQL Agent apart is its sophisticated error handling system. Rather than simply failing when a query has issues, the agent provides detailed diagnostics and attempts to self-correct:

# Check if the DataFrame has error information

if df is not None and hasattr(df, 'attrs') and 'is_error' in df.attrs and df.attrs['is_error']:

error_msg = df.attrs.get('error', 'Unknown SQL error')

if debug_mode:

debug_info.append(f"SQL Error: {error_msg}")

# Format error message for better readability

formatted_error = f"Error executing SQL query: {error_msg}"

# Add more context for common SQL errors

if "syntax error" in error_msg.lower():

formatted_error += "\nThis appears to be a syntax error. Please check your SQL syntax."

elif "does not exist" in error_msg.lower() and "column" in error_msg.lower():

formatted_error += "\nThe specified column does not exist in the table."

elif "does not exist" in error_msg.lower() and "table" in error_msg.lower():

formatted_error += "\nThe specified table does not exist in the database."

This error handling system:

- Captures database errors during query execution

- Analyzes the error message to identify the cause

- Adds human-readable context to help users understand what went wrong

- Passes the error information back to the LLM agent for potential correction

The result is a more resilient system that helps users learn from mistakes and understand database errors better. When a query fails, the system doesn’t just say “error” - it explains what happened and often suggests how to fix it.

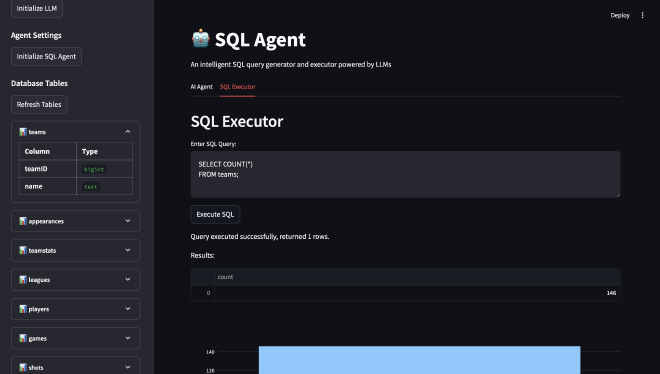

Direct SQL Execution Interface #

For users who already know SQL or want more control, the SQL Agent provides a direct SQL execution interface:

def sql_executor_tab():

"""Content for the SQL Executor tab"""

st.header("SQL Executor")

# Initialize session state variables if they don't exist

if "current_query" not in st.session_state:

st.session_state.current_query = ""

# Handle the Clear Results action

if "clear_results_clicked" in st.session_state and st.session_state.clear_results_clicked:

# Reset the clear button state

st.session_state.clear_results_clicked = False

# Clear results

if "sql_executor_result" in st.session_state:

del st.session_state.sql_executor_result

st.session_state.current_query = ""

# Direct SQL execution

direct_sql = st.text_area("Enter SQL Query:", height=100, key="sql_input")

execute_button = st.button("Execute SQL")

This interface provides several advantages:

- Immediate Execution: Write SQL directly and see results instantly

- Query Refining: Use the natural language interface to generate a starting query, then refine it manually

- Learning Tool: See how the AI converts questions to SQL, then modify the generated queries to learn SQL

- Detailed Feedback: Get the same sophisticated error handling and debugging information

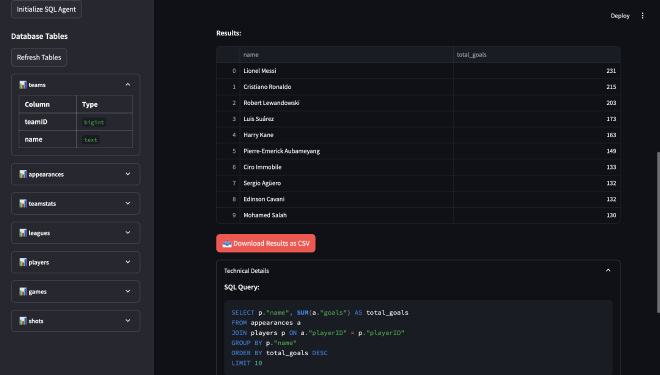

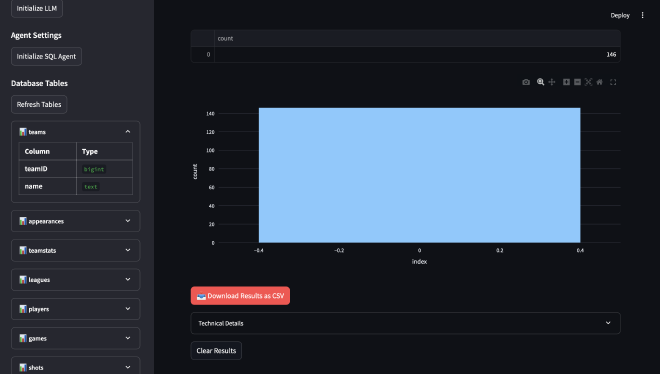

Visualization and Result Processing #

Beyond simply returning data, the SQL Agent automatically determines appropriate visualizations for query results:

def generate_visualization(df):

"""Generate an appropriate visualization based on the dataframe content."""

try:

# Skip visualization for empty dataframes or those with too many columns

if df is None or df.empty or len(df.columns) > 10:

return

# Determine the number of numeric columns

numeric_cols = df.select_dtypes(include=['number']).columns.tolist()

# If there are two numeric columns, create a scatter plot

if len(numeric_cols) == 2:

st.subheader("Data Visualization")

fig = px.scatter(df, x=numeric_cols[0], y=numeric_cols[1], title=f"{numeric_cols[1]} vs {numeric_cols[0]}")

st.plotly_chart(fig, use_container_width=True)

# If there is one numeric column and one categorical, create a bar chart

elif len(numeric_cols) == 1 and len(df.columns) >= 2:

categorical_cols = [col for col in df.columns if col not in numeric_cols]

if categorical_cols:

st.subheader("Data Visualization")

categorical_col = categorical_cols[0]

# Group by the categorical column and calculate mean of numeric column

if len(df[categorical_col].unique()) <= 10: # Limit to 10 categories

fig = px.bar(

df,

x=categorical_col,

y=numeric_cols[0],

title=f"{numeric_cols[0]} by {categorical_col}"

)

st.plotly_chart(fig, use_container_width=True)

The visualization system:

- Analyzes the returned data structure

- Determines the most appropriate visualization type

- Automatically generates charts using Plotly

- Provides user-friendly export options for further analysis

This makes the SQL Agent not just a query tool but a complete data exploration system that helps users derive insights from their database.

Future and Potential Improvements #

While the current SQL Agent provides robust functionality, several enhancements could take it to the next level:

Query History and Optimization #

Implementing a system to track query history would allow the agent to learn from past interactions and improve future queries. This could include:

- Storing successful queries for similar questions

- Analyzing query performance and suggesting optimizations

- Building a personalized “frequently asked questions” system for each user

Predictive Query Completion #

Similar to how modern code editors offer code completion, the SQL Agent could provide predictive SQL completion:

- Suggesting table and column names as users type

- Offering common query patterns based on context

- Auto-completing JOINs based on foreign key relationships

Data Security Enhancements #

For enterprise deployment, additional security features would be valuable:

- Role-based access control to limit data visibility

- Query whitelisting to prevent potentially harmful operations

- Audit logging of all queries for compliance purposes

- Data masking for sensitive information

Cross-Database Federation #

Expanding the agent to work across multiple databases simultaneously would unlock powerful capabilities:

- Querying data across different database systems

- Performing joins between tables in different databases

- Creating virtual views that combine data from multiple sources

Challenges and Learnings #

Building the SQL Agent presented several interesting challenges and valuable lessons:

SQL Dialect Handling #

One of the most significant challenges was handling different SQL dialects across database systems. PostgreSQL and MySQL have subtle differences that can cause queries to fail. The solution involved:

- Detecting the database type during connection

- Adjusting generated queries to match the specific dialect

- Creating a robust error handling system that could interpret errors from different database systems

This highlighted the importance of abstraction layers that can handle system-specific details without exposing them to users.

Error Propagation and Recovery #

Initially, when SQL errors occurred, the system would simply fail silently or with minimal information. Building a comprehensive error handling system required:

- Capturing and preserving error information at the database layer

- Parsing and enhancing error messages to make them user-friendly

- Properly propagating errors through the component layers

- Feeding errors back to the LLM agent to enable self-correction

This taught me the value of designing for failure from the start and treating error handling as a first-class feature rather than an afterthought.

LLM Context Management #

Another challenge was ensuring the LLM had sufficient context about the database schema without exceeding context limits. The solution involved:

- Dynamically fetching only the relevant schema information

- Caching schema data to reduce repeated lookups

- Carefully constructing prompts to include the most valuable context

- Implementing a system for the agent to explicitly request additional information when needed

This experience emphasized the importance of thoughtful prompt engineering and context management when working with LLMs.

Conclusion #

The SQL Agent represents a powerful bridge between natural language and databases, democratizing access to data and streamlining database interactions for users of all technical levels. By combining the natural language understanding of LLMs with robust database connectivity and intuitive visualization, this tool transforms how we interact with data.

Beyond its practical utility, this project demonstrates the potential of AI agents to enhance specialized technical domains. Rather than replacing human expertise, these systems amplify it—allowing analysts to focus on insights rather than syntax, developers to work more efficiently, and organizations to unlock the full value of their data.

The future of database interaction lies not in making everyone learn SQL, but in making databases understand us—and the SQL Agent is a significant step toward that vision.